Specialization Review: Mathematics for Machine Learning

Probably you’ve already realized that for getting started with machine learning you’ll have to understand certain areas of math. I took the Mathematics of Machine Learning specialization on Coursera last year as the first step on that path. With this review, I’d like to help those people make a decision who are also thinking about taking it.

1. What you’ll learn?

MML is a three courses specialization taught by the Imperial College of London. It covers three areas of mathematics: linear algebra, multivariate calculus, and statistics. The latter focuses mostly on a specific technique called principal component analysis (PCA).

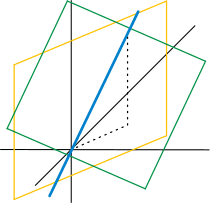

1.1. Linear algebra

Linear algebra plays an important role in working with data. Properties of real-world phenomena are often represented as a vector and such samples or measurements are organized as matrices. Professor David Dye demonstrates that through the example of estimating prices of real estate based on their characteristics, such as how many bedrooms they have, their area in square meters, etc.

Image courtesy of Jakob Scholbach

Image courtesy of Jakob Scholbach

In this first course, you’ll learn what vectors are, how they span space, what matrices are, and how they can operate on, and transform vectors. You’ll understand how solving linear equations through elimination is related to geometric interpretations of matrix transformations. The fifth and last module will introduce you to eigenvectors and eigenvalues that are useful for modeling processes that evolve in iterative steps (or in time). You’ll implement and analyze the PageRank algorithm with the help of eigenvalues.

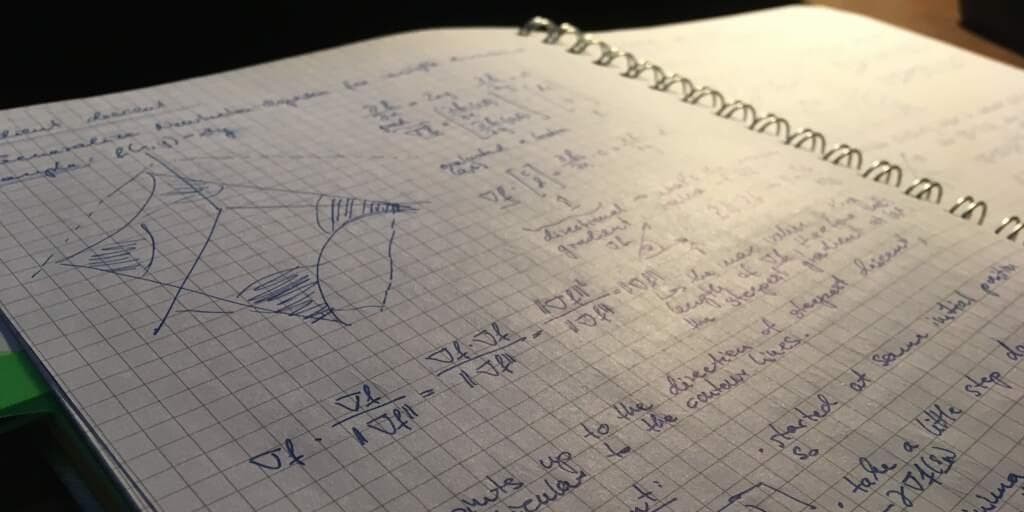

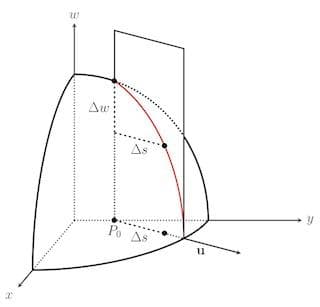

1.2. Multivariate calculus

A big part of machine learning is being able to fit a model to data, that fitting is done through minimizing some objective function or in other words minimizing the error. Thus, in finding the optimal solution, calculus has an important application in attempting to find parameter values where the fitting is best.

Image courtesy of John B. Lewis

Image courtesy of John B. Lewis

In this second course, Dr. Sam Cooper will introduce you to the basics of calculus in an intuitive manner, then you’ll move on to the multivariate case and to the Taylor series. From the second module of the course, you’ll see linear algebra applied as a means of dealing with the multivariate case. As an application of the multivariate chain rule, you learn how neural networks work and even implement one. In the last two modules, David Dye combines all that you’ve learned so far. You’ll see gradient descent in action, that’s the heart of ML algorithms and you’ll venture into the realm of statistics with linear and non-linear regression.

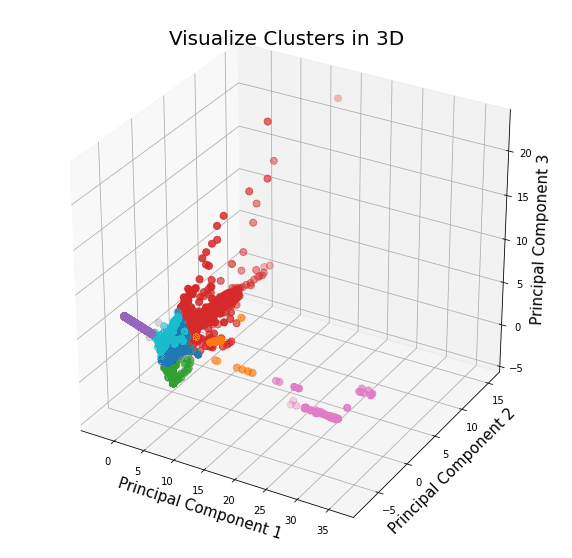

1.3. Principal Component Analysis

PCA is a technique to reduce the dimensionality of data. Sometimes certain aspects of the data are highly correlated and eventually, you can express the dataset with fewer features.

In this last course, Professor Marc Deisenroth will teach you some basics of statistics, like means and variances. Linear algebra will show appearances as a means of projecting data points to a lower-dimensional subspace and you’ll get your hands dirty by implementing PCA yourself in Python at the end.

2. Will you enjoy it?

Definitely, the specialization is very rewarding, it isn’t too hard, yet you’ll see that much to get the gist of what ML is about. The emphasis is mostly on building mathematical intuition and less or even not at all about grinding through formal proofs of theorems.

Especially for a guy like me who studied these subjects in the prussian-style eastern European education system, where teaching happens mostly on theorem-proof-basis, this was very enjoyable.

3. How hard is it?

The first course is super easy, I would even say that it’s even missing certain details that I would have found useful. Nevertheless, it’s not a full undergraduate-level course. The second one is a bit harder, but still not that much and there are lots of visualizations used as a means of demonstrating what the formulas are actually doing.

The last third course requires a more mathematical maturity and it’s also more abstract than the first two ones. It will require you to be more fluent in Python and at certain places you'll feel you're own your own when you do your homework. You'll also need to gather some details from external sources.

4. What additional resources are useful?

4.1. Textbooks

Mathematical Methods in the Physical Sciences is one of the recommended books for the first two courses. I managed to purchase a copy on eBay at a decent price.

Mathematics for Machine Learning is a book written by Professor Deisenroth and two others. At that time when I was taking the specialization, it wasn’t yet available in print, but I used the WiP online version. You can read the details of PCA in this book and of course, it’s also very useful for understanding linear algebra and multivariate calculus.

4.2. Courses

For the first course, Professor Gil Strang’s book Introduction to Linear Algebra is one of the recommended sources. But why read, if you can watch him teaching online? MIT’s linear algebra course (often referred to just as “18.06”) proved to be edifying especially for understanding eigenvalues deeper in the few last modules of the first course. I think I should write a review of that as well because I ended up watching most of the lectures.

For the last course (PCA), you need to look into other sources anyway for example to grasp why a covariance matrix is always positive definite. At this point, being aware of the fact that MIT OCW is an intellectual goldmine, a relatively new course also from Professor Strang helped me fill in the details: Matrix Methods in Data Analysis, Signal Processing, and Machine Learning (often referred to just as “18.065”). I watched most of the lectures here as well, but particularly for PCA lectures 4 - 7 are relevant.

5. Conclusion

Was too long to read, hah? No worries, I’ll summarize what’s the catch.

- The Mathematics for Machine Learning specialization taught by three professors from Imperial College of London is worth taking, you’ll get to know to the basics of math required to get started with ML

- The first two courses are easier and the last one is a bit more challenging and requires you to be more proficient in Python

- As neither of the three courses is full graduate-level ones, Professor David Dye mentions this fact right at the beginning, you might feel that you want more details here and there.

- It’s worth taking it. Go ahead and do it! Don't forget to shoot a photo at Imperial when you finished and share it. ;)

Join my Newsletter

If you like Java and Spring as much as I do, sign up for my newsletter.